Out-of-the-box AI models offer impressive capabilities but often fall short when applied to specialized, domain-specific data. A custom-built solution provides distinct advantages:

- Leverage Proprietary Knowledge: Utilize internal documents and datasets for deeper insights.

- Ensure Data Privacy: Maintain sensitive information within your infrastructure.

- Enhance Accuracy: Fine-tune responses to align with industry-specific terminology and context.

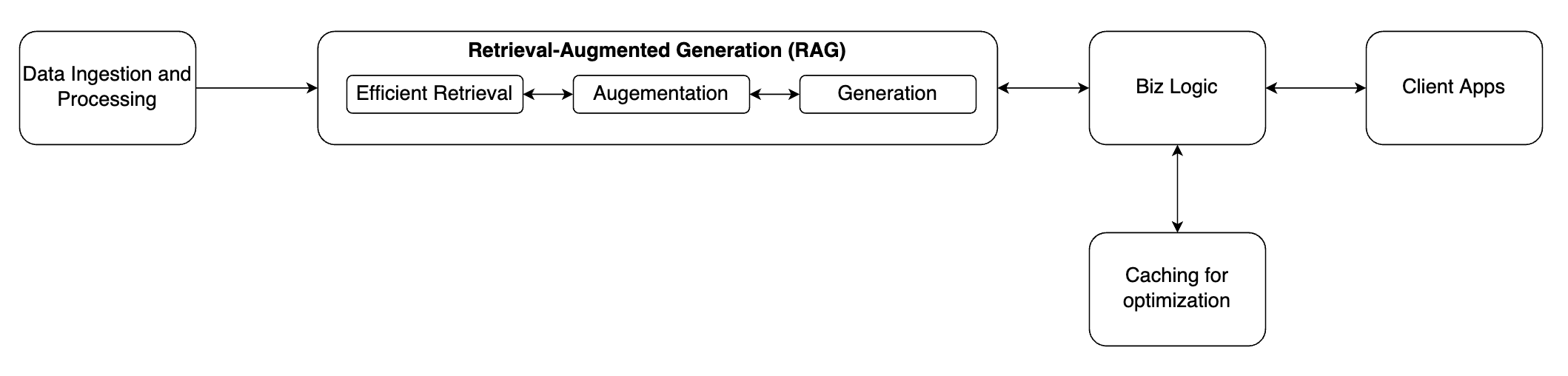

Critical Components of the System

Building Blocks of an AI-Based Chatbot System

Data Ingestion and Preprocessing

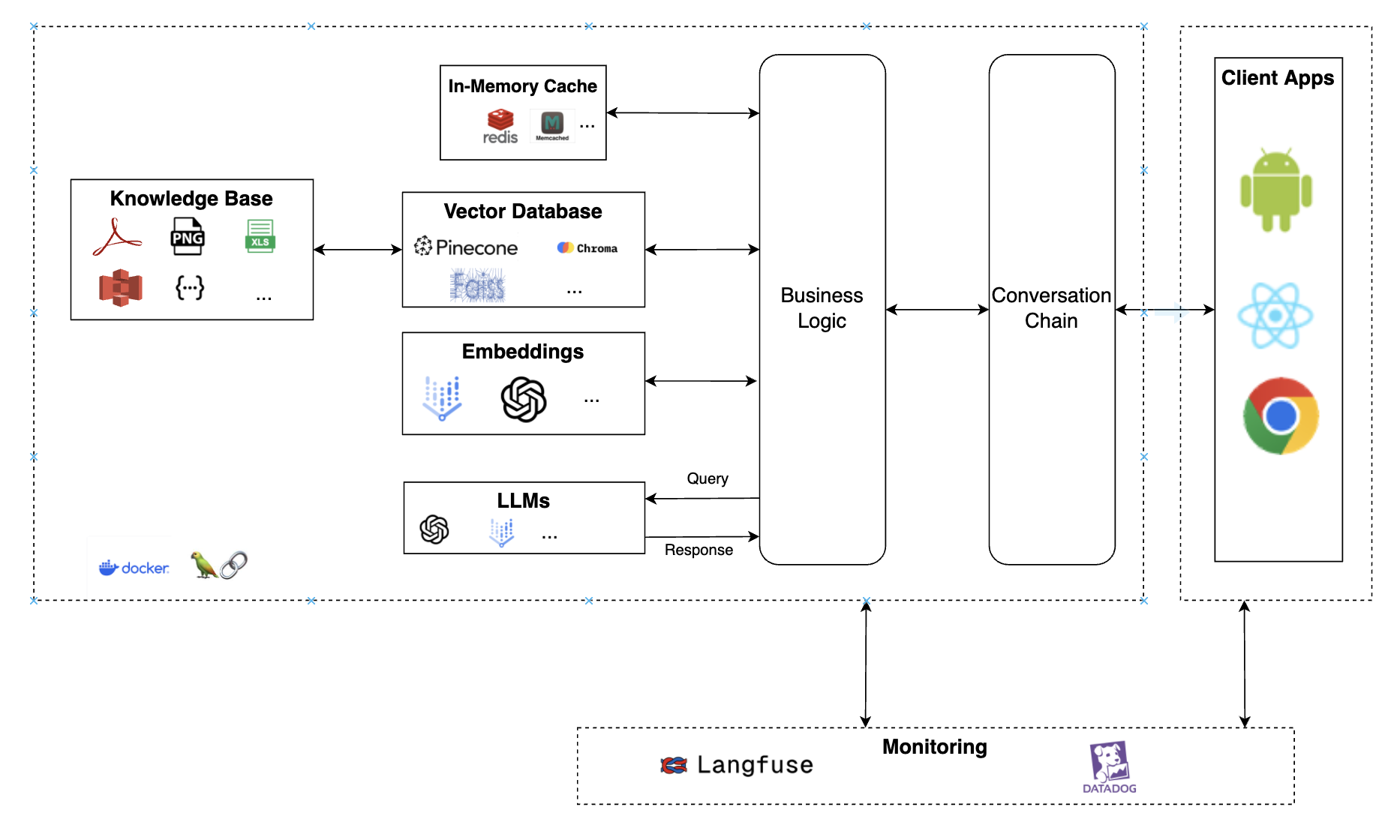

Handling varied document formats (PDF, Word, plain text) requires an ingestion and preprocessing pipeline with three steps:

- Document Loading: Use a library such as LangChain, which provides document loaders for common file types.

- Text Splitting: Break documents into smaller, manageable chunks suitable for LLM context windows.

- Embedding Generation: Convert text chunks into vector embeddings to facilitate efficient retrieval.
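The splitting and embedding steps above can be sketched in plain Python. This is a minimal illustration, not a production pipeline: `split_text` and `embed` are hypothetical helper names, the chunk sizes are arbitrary, and the hashed bag-of-words vector is only a stand-in for a real embedding model, which would capture meaning rather than shared tokens.

```python
import hashlib
import math
import re


def split_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into character windows that overlap by `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


def embed(text: str, dim: int = 64) -> list[float]:
    """Toy embedding (assumption): hash each token into one of `dim` buckets,
    count occurrences, and scale the vector to unit length."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # hashlib gives a hash that is stable across runs, unlike built-in hash().
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec
```

The overlap keeps a sentence that straddles a chunk boundary visible in both neighbouring chunks, at the cost of storing some text twice.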

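Vector Database for Efficient Retrieval

For rapid and accurate retrieval, embeddings must be indexed and stored in a vector store. Options include Pinecone (a managed cloud service), FAISS (a similarity-search library you run yourself), and Chroma (an open-source embedding database).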
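To make the idea concrete, here is a small in-memory index that ranks stored vectors by cosine similarity. It is a sketch under stated assumptions: `InMemoryVectorIndex` is a hypothetical class, not the API of Pinecone, FAISS, or Chroma, and it scans every vector on each query, whereas real vector stores use index structures to avoid that.

```python
import math
from dataclasses import dataclass, field


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class InMemoryVectorIndex:
    """Hypothetical stand-in for a vector database (brute-force search)."""
    items: list[tuple[str, list[float]]] = field(default_factory=list)

    def add(self, text: str, vector: list[float]) -> None:
        self.items.append((text, vector))

    def search(self, query_vector: list[float], k: int = 3) -> list[tuple[float, str]]:
        """Return up to k (score, text) pairs, highest similarity first."""
        scored = [(cosine(query_vector, vec), text) for text, vec in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]
```

A brute-force scan like this is adequate for a few thousand chunks; beyond that, the approximate nearest-neighbour indexes in dedicated tools become worthwhile.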

Retrieval-Augmented Generation (RAG)

RAG combines retrieval from a vector database with LLM generation, enhancing response accuracy:

- Query Processing: Convert user queries into embeddings.

- Context Retrieval: Fetch the most relevant document chunks.

- Response Generation: Use the retrieved context as input for the LLM.
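The three steps above can be wired together roughly as follows. This is a minimal sketch: `build_prompt` and `answer` are hypothetical names, and the `retrieve` and `generate` callables are assumptions standing in for your vector search (which would embed the query internally) and your LLM client. The prompt wording is illustrative only.

```python
from typing import Callable


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Place numbered context chunks ahead of the user's question."""
    context = "\n\n".join(
        f"[{i + 1}] {chunk}" for i, chunk in enumerate(context_chunks)
    )
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer(
    question: str,
    retrieve: Callable[[str, int], list[str]],  # assumption: query -> top-k chunks
    generate: Callable[[str], str],             # assumption: prompt -> LLM text
    k: int = 3,
) -> str:
    """Retrieve context for the question, then ask the LLM to answer from it."""
    chunks = retrieve(question, k)
    if not chunks:
        # Avoid calling the LLM with no grounding material.
        return "I could not find relevant information in the documents."
    return generate(build_prompt(question, chunks))
```

Passing the retriever and generator in as functions keeps the pipeline independent of any particular vector store or model provider, and makes it easy to test with stubs.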

Implementing In-Memory Caching

Use an in-memory store such as Redis or Memcached to cache answers to frequently asked questions and to hold conversation history for multi-turn dialogues. This reduces response time and lowers cost.

Benefits:

- Reduced Latency: Instant responses for repeated queries.

- Cost Savings: Lower LLM API usage.
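The caching pattern can be illustrated with a small time-to-live cache. In this sketch a Python dictionary stands in for Redis or Memcached, and `TTLCache`, `cached_answer`, and the five-minute default are assumptions chosen for the example.

```python
import hashlib
import time
from typing import Callable, Optional


class TTLCache:
    """Dictionary-backed cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store: dict[str, tuple[float, str]] = {}

    @staticmethod
    def _key(question: str) -> str:
        # Normalise case and whitespace so trivially different phrasings match.
        normalized = " ".join(question.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, question: str) -> Optional[str]:
        key = self._key(question)
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, cached = entry
        if self._clock() >= expires_at:
            del self._store[key]  # drop the stale entry
            return None
        return cached

    def set(self, question: str, answer: str) -> None:
        self._store[self._key(question)] = (self._clock() + self._ttl, answer)


def cached_answer(question: str, cache: TTLCache,
                  generate: Callable[[str], str]) -> str:
    """Return a cached answer if present; otherwise generate and store one."""
    hit = cache.get(question)
    if hit is not None:
        return hit
    result = generate(question)
    cache.set(question, result)
    return result
```

Exact-match keys only help with repeated questions. Expiry matters because a cached answer can go stale when the underlying documents change.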

Structuring with Business Logic and Conversation Layers

A well-structured system ensures maintainability and scalability:

- Business Logic Layer: Handles query validation, access control, and logging.

- Conversation Layer: Manages user interactions and retains context for multi-turn dialogues.
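One way to keep the two layers separate is sketched below. Everything here is an assumption made for illustration: the role names, the length limit, the logger name, and the `validate_query` and `Conversation` helpers are hypothetical, not a prescribed design.

```python
import logging
from dataclasses import dataclass, field

logger = logging.getLogger("qa_bot")  # hypothetical logger name

MAX_QUESTION_LENGTH = 500  # assumption: arbitrary limit for the example
ALLOWED_ROLES = frozenset({"employee", "admin"})  # assumption: example roles


def validate_query(user_role: str, question: str) -> str:
    """Business logic layer: access control, validation, and logging."""
    if user_role not in ALLOWED_ROLES:
        raise PermissionError(f"role {user_role!r} may not query the system")
    cleaned = question.strip()
    if not cleaned:
        raise ValueError("question is empty")
    if len(cleaned) > MAX_QUESTION_LENGTH:
        raise ValueError("question is too long")
    logger.info("accepted query from role=%s length=%d", user_role, len(cleaned))
    return cleaned


@dataclass
class Conversation:
    """Conversation layer: keeps the most recent turns as context."""
    max_turns: int = 5
    turns: list[tuple[str, str]] = field(default_factory=list)

    def add_turn(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))
        # Keep only the latest turns so the prompt stays within the context window.
        self.turns = self.turns[-self.max_turns:]

    def history_text(self) -> str:
        return "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self.turns)
```

Keeping validation out of the conversation object means access rules can change without touching dialogue handling, and the history can be moved to an external store later.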

Sample Solution Architecture

Deployment Considerations

- Scalability: Deploy using cloud services like AWS Lambda or Azure Functions.

- Security: Implement data encryption and robust access controls.

- Monitoring: Use tools to track performance metrics, user activity, and system health.

Conclusion

Building a custom AI question-answering (QA) system lets a business extract actionable insights from its proprietary data, turning static documents into searchable knowledge assets. By combining retrieval-augmented generation, in-memory caching, and structured logic layers, you can build a scalable, accurate, and secure solution tailored to your organization's needs.

Whether you are a startup or an enterprise, this approach lets you use generative AI effectively while keeping control over your data and processes.